ChatGPT has crept into academia: more than 700 papers have used AI without disclosing it. Some top journals have even taken it upon themselves to delete AI wording in more than 100 papers. A recent Nature article reveals the chaos behind academic publishing.

"As of my last knowledge update", "Regenerate response", "As an AI language model"...

Stock phrases like these now turn up regularly in papers published in top journals.

In 2024, a paper published in Radiology Case Reports was retracted because the phrase "I am an AI language model" appeared in the article.

Shockingly, the sentence not only escaped the authors' notice but also slipped past editors, reviewers, and typesetters.

Nature's latest report lays bare the current state of top journals: a large amount of AI-written text is appearing in the academic literature.

Alex Glynn of the University of Louisville created an online tracker, "Academ-AI", which has recorded more than 700 papers showing traces of AI use.

Some of these traces are subtler, such as "Of course, here is", a typical chatbot-style opening.

Glynn's analysis also showed that 13% of the first 500 flagged papers were published in journals from large publishers such as Elsevier, Springer Nature and MDPI.
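The kind of phrase-based screening described here can be illustrated with a minimal sketch. This is not Glynn's actual method, which the article does not describe; the phrase list contains only the examples quoted in this article, and the function name `find_telltale_phrases` is hypothetical.

```python
import re

# Telltale phrases quoted in this article (an assumption: a real tracker
# would presumably use a longer, curated list).
TELLTALE_PHRASES = [
    "as of my last knowledge update",
    "regenerate response",
    "as an ai language model",
    "i am an ai language model",
    "of course, here is",
]


def find_telltale_phrases(text):
    """Return (phrase, offset) pairs for each telltale phrase found.

    Matching ignores case and collapses runs of whitespace, so a phrase
    broken across lines is still caught. Offsets refer to the
    whitespace-normalized text, not the original string.
    """
    normalized = re.sub(r"\s+", " ", text).lower()
    hits = []
    for phrase in TELLTALE_PHRASES:
        for match in re.finditer(re.escape(phrase), normalized):
            hits.append((phrase, match.start()))
    # Report hits in the order they appear in the text.
    return sorted(hits, key=lambda hit: hit[1])


if __name__ == "__main__":
    sample = "In summary, the results are robust.\nRegenerate response"
    for phrase, offset in find_telltale_phrases(sample):
        print(f"found {phrase!r} at offset {offset}")
```

A match only flags a paper for human review. A phrase can appear legitimately, for instance in a paper that studies chatbots and quotes their output.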

Caught in the act: AI's "face" can no longer be hidden in top journals

In recent years, generative AI such as ChatGPT has swept through the academic community and profoundly changed how papers are written and published.

Researchers increasingly rely on these tools to draft, polish, and even review papers. At the same time, a worrying phenomenon has surfaced: many papers use AI without disclosing it.

Even after such cases are discovered, some papers are quietly "corrected", which poses a potential threat to scientific integrity.

In one lithium battery paper published by Elsevier, the introduction opens with a stock ChatGPT response.

Similar cases abound.

For example, a paper on liver damage contains this remarkable sentence in its summary:

"In short, I am very sorry, because I am an artificial intelligence language model, I cannot obtain real-time information or specific data of patients..."

An AI-generated illustration in another paper, titled "Cellular functions of spermatogonial stem cells in relation to JAK/STAT signaling pathway", sparked heated discussion online.

In addition to Alex Glynn's findings, Artur Strzelecki, a researcher at the University of Economics in Katowice, Poland, found similar problems in well-known journals.

Searching the Scopus academic database, he identified 64 papers published in top journals. These journals are expected to have rigorous editing and review processes, yet the papers showed traces of AI use that had not been disclosed.

The Nature team contacted several publishers whose papers had been flagged, including Springer Nature, Taylor & Francis, and IEEE, and they pointed to their internal AI policies.

In some cases, these policies do not require authors to disclose the use of AI, or only require disclosure in specific circumstances.

For example, Springer Nature said, "AI that assists manuscript editing does not need to be flagged, including changes made for readability, style, and grammatical or spelling errors."

Even among reviewers, acceptance of using AI to help prepare manuscripts is growing.

Glynn said, "If AI is used to improve language, then as these tools become more popular, simply disclosing that AI was used in some way will become essentially meaningless."

Authors should therefore state clearly in the paper exactly how they used AI, which helps editors and readers decide where closer scrutiny is needed.

Top journals quietly delete the "evidence" from papers

Some people may say that deleting obvious phrases does not necessarily require publishing a correction, and a note mentioning the change is enough.

However, if these changes are not marked, who can ensure that there are no other problems with the paper itself?

For example, in a paper published in PLoS ONE last year (now retracted), experts found the phrase "regenerate response" along with other problems, including many references that could not be verified.

More worryingly, after traces of AI were found in some papers, publishers quietly removed the telltale phrases without issuing any explanation or erratum.

This practice is called "stealth correction". Strzelecki found one case, and Glynn found five.

For example, in a paper published in the Elsevier journal Toxicology in 2023, "regenerate response" was silently deleted.

The journal's AI policy stipulates that authors should disclose the use of generative AI and AI-assisted technologies in the manuscript.

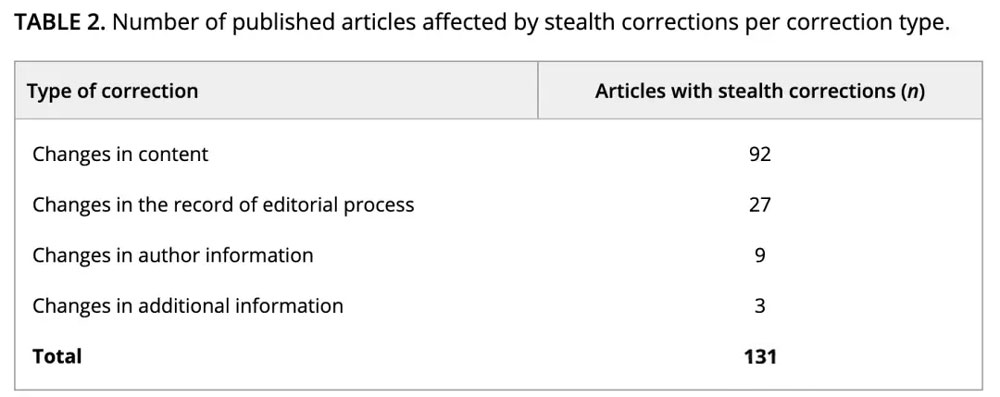

In a statement, an Elsevier spokesperson said, "We investigate submitted and published papers that are suspected of not disclosing the use of generative AI tools and correct the records when necessary."

In addition, Glynn, Guillaume Cabanac, a computer scientist at the University of Toulouse, and others reported in a paper published in February that they had found more than 100 similar silent changes, some of which were related to the undisclosed use of AI.

The publishers with the most stealth corrections were BAKIS Productions LTD, MDPI, and Elsevier, each with more than 20.

By type of modification, changes to a paper's content accounted for as many as 92 cases.

Cabanac said that publishers need to act quickly to screen existing papers and establish stricter review mechanisms.

However, he also pointed out that such problems account for only a very small share of published research and should not shake anyone's trust in science.