Recently, Andrej Karpathy, former Director of AI and Autopilot Vision at Tesla and former researcher at OpenAI, open-sourced nanochat, which he says can train a "simple version of ChatGPT" for less than $100. Shortly after its release, the project had already garnered 5.6k stars on GitHub.

▲ nanochat GitHub homepage (Source: GitHub)

Open-source repository:

GitHub: https://github.com/karpathy/nanochat

Unlike his earlier nanoGPT, which covered only pre-training, nanochat is a minimalist, full-stack training and inference pipeline built from scratch, implementing a "simple version of ChatGPT" with minimal dependencies.

▲ Screenshot of Andrej Karpathy's tweet (Source: X)

Replying in the comments, Karpathy said that nanochat's architecture is broadly similar to Meta's Llama, only simplified, and that it borrows some improvements from modded-nanoGPT.
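The article does not list the architectural details. As one concrete example of what "Llama-like" usually means, Llama normalizes activations with RMSNorm instead of LayerNorm. The sketch below is a plain-Python illustration of that operation only; the article does not say how nanochat simplifies it, so the parameter-free option (`gain=None`) is an assumption made for illustration:

```python
import math
from typing import List, Optional


def rms_norm(x: List[float], gain: Optional[List[float]] = None, eps: float = 1e-6) -> List[float]:
    """Root-mean-square normalization, as used in Llama-style Transformers.

    Unlike LayerNorm, it does not subtract the mean and has no bias term.
    `gain` is the learnable per-channel scale; passing None gives a
    parameter-free variant (an assumed simplification, for illustration).
    """
    # Divide by the root of the mean squared activation (eps avoids division by zero).
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    y = [v / rms for v in x]
    if gain is not None:
        # Apply the per-channel scale.
        y = [g * v for g, v in zip(gain, y)]
    return y


print(rms_norm([3.0, 4.0]))
```

After normalization the mean of the squared outputs is (almost exactly) 1, whatever the scale of the input vector.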

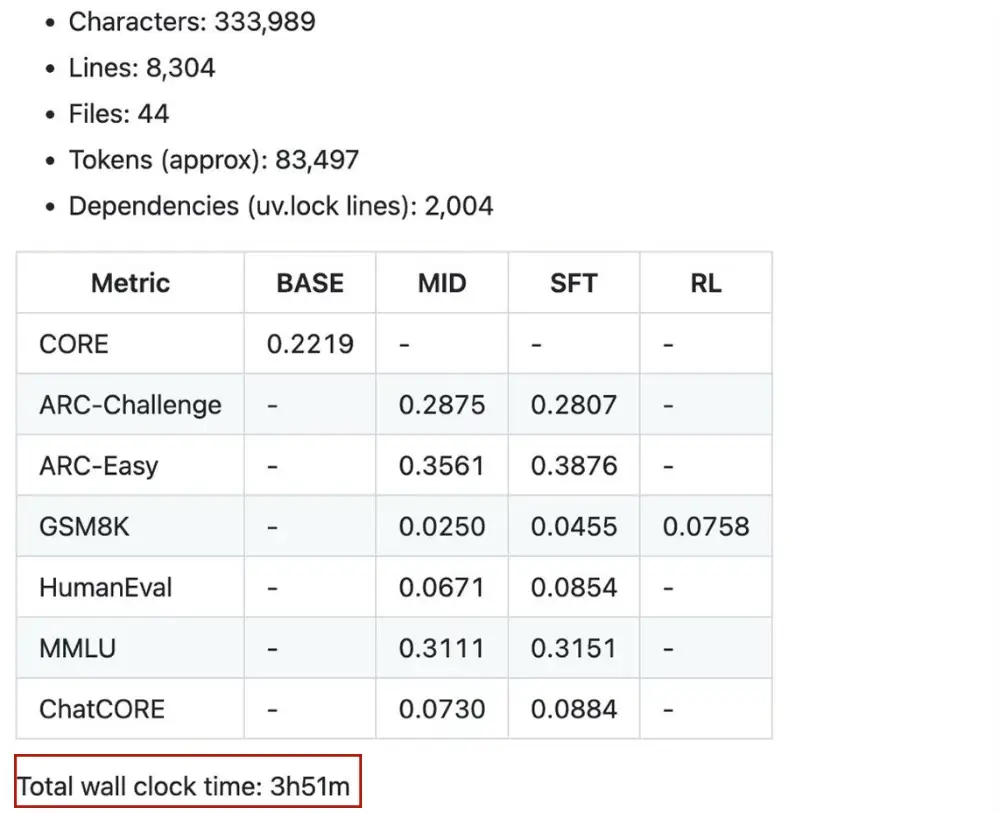

He also revealed that the entire run, up to and including the supervised fine-tuning (SFT) stage, took 3 hours and 51 minutes at a total cost of $92.40. "That even left us $8 for an ice cream treat," he joked.
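The article does not give an hourly price, but one falls out of the two figures quoted above. A quick check in Python, using exact fractions to avoid floating-point noise; the $24/hour rate it prints is derived from the article's numbers, not stated in the article:

```python
from fractions import Fraction

# Figures quoted in the article for the run through SFT.
hours = 3 + Fraction(51, 60)    # 3 h 51 min = 3.85 h
total_cost = Fraction("92.40")  # US dollars

# Implied hourly rate of the cloud GPU instance (derived, not quoted).
hourly_rate = total_cost / hours

# What remains of a $100 budget.
leftover = 100 - total_cost

print(f"hours       = {float(hours):.2f}")
print(f"hourly rate = ${float(hourly_rate):.2f}/h")
print(f"leftover    = ${float(leftover):.2f}")
```

The leftover $7.60 rounds to the "$8 for an ice cream treat" in Karpathy's joke.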

Notably, because support for reinforcement learning (RL) is not yet complete, Karpathy did not include the RL stage in the total runtime.

In other words, a developer can launch a cloud GPU instance, run a single script, and, in about four hours and for under $100, train a "simple version of ChatGPT" that can hold simple conversations, write stories and poems, and answer basic questions.

After about 12 hours of training, the model surpasses GPT-2 on the CORE metric, which measures fundamental capabilities such as reasoning and world knowledge. Karpathy added that with a budget of roughly $1,000, or about 41.6 hours of training, the model improves significantly: it can then solve basic math and programming problems and pass multiple-choice tests.
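The article does not define CORE. As far as we know, it comes from the DCLM benchmark, where it is the average "centered accuracy" over a suite of tasks: each task's accuracy is rescaled so that random guessing scores 0 and a perfect score is 1, then the rescaled scores are averaged. A minimal sketch under that assumption; the task names and numbers below are made up for illustration and are not nanochat results:

```python
def centered_accuracy(acc: float, baseline: float) -> float:
    """Rescale so random guessing maps to 0.0 and a perfect score to 1.0."""
    return (acc - baseline) / (1.0 - baseline)


def core_score(results: dict[str, tuple[float, float]]) -> float:
    """Average centered accuracy over all tasks (DCLM-style CORE, assumed)."""
    centered = [centered_accuracy(acc, base) for acc, base in results.values()]
    return sum(centered) / len(centered)


# Hypothetical per-task results: (accuracy, random-guess baseline).
example = {
    "task_a (4-way multiple choice)": (0.40, 0.25),
    "task_b (2-way multiple choice)": (0.60, 0.50),
    "task_c (open-ended, no chance baseline)": (0.30, 0.0),
}
print(f"CORE = {core_score(example):.3f}")
```

Centering matters because raw accuracies are not comparable across tasks: 40% on a four-way multiple-choice task is only modestly above chance, while 30% on an open-ended task is well above it.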

For instance, a depth-30 model trained for 24 hours (roughly the training compute of GPT-3 Small 125M, or about one thousandth of GPT-3's) scored above 40 on MMLU, the multi-task language understanding benchmark; above 70 on ARC-Easy, a grade-school science reasoning benchmark; and above 20 on GSM8K, a math word-problem benchmark.
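The "about one thousandth" comparison can be sanity-checked with the common rule of thumb that training compute is roughly C ≈ 6·N·D FLOPs, for N parameters and D training tokens. The sketch assumes the GPT-3 paper's setup, in which every model size was trained on about 300B tokens; the rule itself is an approximation, not an exact count:

```python
def train_flops(n_params: int, n_tokens: int) -> int:
    """Rough training compute: C ~= 6 * N * D (forward plus backward pass)."""
    return 6 * n_params * n_tokens


TOKENS = 300_000_000_000  # GPT-3 paper: each model size trained on ~300B tokens

gpt3_small = train_flops(125_000_000, TOKENS)     # GPT-3 Small, 125M params
gpt3_full = train_flops(175_000_000_000, TOKENS)  # GPT-3, 175B params

print(f"GPT-3 Small: {gpt3_small:.2e} FLOPs")
print(f"GPT-3 175B : {gpt3_full:.2e} FLOPs")
print(f"ratio      : 1/{gpt3_full // gpt3_small}")
```

With these inputs the ratio is 1/1400, consistent with "about one thousandth" as an order-of-magnitude statement. The sketch does not estimate the depth-30 model's own compute, since the article gives neither its parameter count nor its token budget.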

▲ nanochat performance chart (Source: GitHub)

This 8,304-line project implements the following features:

- Tokenizer training using a new Rust implementation;

- Pre-training a Transformer LLM on the FineWeb dataset and evaluating it across multiple dimensions using the CORE metric;

- Midtraining on SmolTalk user-assistant conversations, multiple-choice questions, and tool-use data;

- Supervised fine-tuning (SFT) and evaluation of the chat model on world-knowledge multiple-choice benchmarks (ARC-E/C, MMLU), math (GSM8K), and code (HumanEval);

- Optional reinforcement learning using the "GRPO" algorithm on the GSM8K dataset;

- Efficient inference through an engine with a key-value (KV) cache, supporting simple prefill/decode and tool use (a Python interpreter in a lightweight sandbox), with interaction via a CLI or a ChatGPT-like web interface;

- Generation of a single-page Markdown report card that summarizes the entire run and presents it in a gamified way.
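The tokenizer bullet does not name the algorithm. Assuming a byte-level byte-pair-encoding (BPE) tokenizer, the usual choice for GPT-style models, training boils down to repeatedly merging the most frequent adjacent pair of tokens. A toy Python version of that loop, for illustration only and not the Rust code in the repository:

```python
from collections import Counter


def train_bpe(text: str, num_merges: int) -> list[tuple[int, int]]:
    """Learn byte-pair merges from raw text (toy, single-sequence version)."""
    ids = list(text.encode("utf-8"))  # start from raw bytes (token ids 0..255)
    merges = []
    next_id = 256                     # first id available for a merged token
    for _ in range(num_merges):
        pairs = Counter(zip(ids, ids[1:]))
        if not pairs:
            break                     # fewer than two tokens left
        best = max(pairs, key=pairs.get)  # most frequent adjacent pair
        merges.append(best)
        # Replace every non-overlapping occurrence of `best`, left to right.
        merged, i = [], 0
        while i < len(ids):
            if i + 1 < len(ids) and (ids[i], ids[i + 1]) == best:
                merged.append(next_id)
                i += 2
            else:
                merged.append(ids[i])
                i += 1
        ids = merged
        next_id += 1
    return merges


print(train_bpe("aaabdaaabac", 3))
```

Each learned merge becomes a new vocabulary entry. A production tokenizer also splits text into chunks with a regex before counting pairs and runs over gigabytes of data, which is where a compiled implementation pays off.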
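The midtraining and fine-tuning steps in the list above both train on user-assistant conversations. A common way to do this, assumed here rather than taken from the article, is to render each conversation with special role tokens and compute the loss only on the assistant's replies. A minimal sketch; the token strings and the `render_conversation` helper are hypothetical, and nanochat's actual format may differ:

```python
# Hypothetical special-token strings, for illustration only.
BOS = "<|bos|>"
ROLE_TOKENS = {
    "user": ("<|user_start|>", "<|user_end|>"),
    "assistant": ("<|assistant_start|>", "<|assistant_end|>"),
}


def render_conversation(messages: list[dict]) -> tuple[list[str], list[int]]:
    """Flatten a chat into text segments plus a per-segment loss mask.

    mask = 1 marks text the model is trained to predict (assistant replies
    and their end marker, so the model learns when to stop);
    mask = 0 marks context only (BOS, user turns, and start markers).
    """
    segments, mask = [BOS], [0]
    for msg in messages:
        start, end = ROLE_TOKENS[msg["role"]]
        train_on = 1 if msg["role"] == "assistant" else 0
        segments += [start, msg["content"], end]
        mask += [0, train_on, train_on]
    return segments, mask


segs, mask = render_conversation([
    {"role": "user", "content": "Write a haiku about GPUs."},
    {"role": "assistant", "content": "Silicon rivers..."},
])
print(list(zip(segs, mask)))
```

For readability the mask is kept per text segment; a real pipeline would tokenize each segment and repeat its mask value for every resulting token.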
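The core idea of GRPO (Group Relative Policy Optimization), named in the reinforcement-learning bullet, is to sample several answers to the same prompt and score each answer relative to its group, which removes the need for a learned value function. The sketch shows only that advantage computation, with a hypothetical 0/1 correctness reward on GSM8K-style problems; the policy-gradient update and any simplifications nanochat makes are not shown:

```python
import statistics


def group_relative_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """GRPO-style advantages for one prompt's group of sampled answers.

    Each reward is centered on the group mean and scaled by the group's
    standard deviation; eps keeps the division safe when all rewards match.
    """
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


# Hypothetical rewards for four sampled answers: 1.0 = correct, 0.0 = wrong.
rewards = [1.0, 0.0, 0.0, 1.0]
print(group_relative_advantages(rewards))
```

Correct answers get a positive advantage and are reinforced, wrong ones are pushed down; if every answer in a group gets the same reward, all advantages are zero and that prompt contributes no learning signal.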
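The inference bullet relies on a key-value (KV) cache. The idea: during prefill, the prompt's keys and values are computed once and stored; during decoding, each new token only computes its own query, key, and value and attends over the cache instead of reprocessing the whole prefix. A toy single-head version in pure Python; the q/k/v vectors are supplied directly (a real model derives them from learned projections), and the `prefill` helper loops token by token, whereas real engines process the prompt in one batched pass:

```python
import math


def attend(q, keys, values):
    """Single-head scaled dot-product attention of one query over keys/values."""
    scale = 1.0 / math.sqrt(len(q))
    scores = [scale * sum(a * b for a, b in zip(q, k)) for k in keys]
    m = max(scores)                             # subtract max for numerical stability
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    dim = len(values[0])
    return [sum(w * v[d] for w, v in zip(weights, values)) / total for d in range(dim)]


class KVCache:
    """Keeps keys/values of past positions so they are never recomputed."""

    def __init__(self):
        self.keys, self.values = [], []

    def step(self, q, k, v):
        # Store this position's key/value, then attend over all positions so far.
        # Only past and current entries exist, so attention is causal by construction.
        self.keys.append(k)
        self.values.append(v)
        return attend(q, self.keys, self.values)


def prefill(cache, qkv_seq):
    """Feed the prompt through the cache; return the output at the last position."""
    out = None
    for q, k, v in qkv_seq:
        out = cache.step(q, k, v)
    return out


# Hypothetical (q, k, v) triples for a 3-token sequence.
seq = [([1.0, 0.0], [1.0, 0.0], [1.0, 2.0]),
       ([0.0, 1.0], [0.0, 1.0], [3.0, 4.0]),
       ([1.0, 1.0], [1.0, 1.0], [5.0, 6.0])]
cache = KVCache()
prefill(cache, seq[:2])        # prompt: first two tokens
print(cache.step(*seq[2]))     # decode: one new token reuses the cached keys/values
```

The cached result is identical to recomputing attention over the full prefix; the saving is that earlier keys and values are stored rather than recomputed for every generated token.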

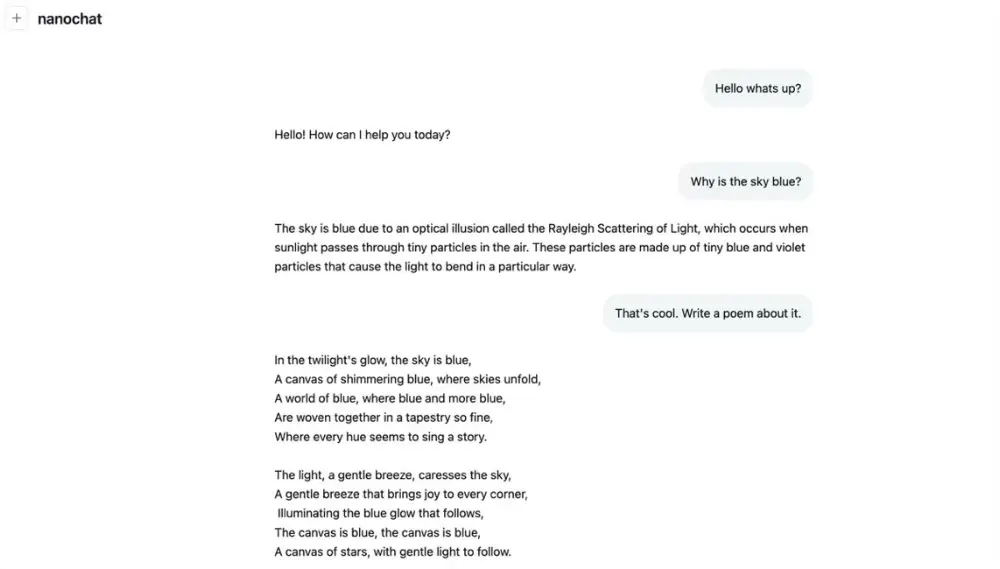

In the comments under the original tweet, Karpathy also shared a sample conversation between nanochat and a user. In it, even the basic version of nanochat works as a conversational chatbot and can compose poetry on request.

▲ nanochat functionality demo (Source: X)

Karpathy's tweet drew widespread praise online, with some users calling it "very inspiring" and one going so far as to say, "This person (referring to Karpathy) is simply a legend."

▲ Excerpts from comments (Source: X)

Some users have even built interactive, live code maps of nanochat that make the codebase easier to explore.

Conclusion: Nanochat Provides a Reference for Cost Control in AI Development

The launch of nanochat offers a new reference point for cost control in AI model development. The project shows that, with appropriate architectural design and process optimization, basic conversational AI functionality can be built for under $100.

Although the current version still trails large commercial models in performance, its low cost opens AI development to a wider range of scenarios. As the open-source community continues to refine the project, this lean development approach may give fresh momentum to the spread of AI technology.