When Nataliya Kosmyna, a research scientist at the MIT Media Lab, released a preliminary, non-peer-reviewed study in June, she probably didn't anticipate the global furor it would ignite. The study, which used electroencephalograms to monitor the brain activity of 54 Boston-area college students while they wrote, yielded startling results: compared with students who wrote entirely on their own, brain connectivity was 55% lower among those who used ChatGPT and 48% lower among those who used Google search. Even more disturbing, when the students who had become accustomed to using ChatGPT were later asked to write independently, their brain activity rebounded but never reached the levels of those who had never used AI.

Figure | Related Paper (Source: arXiv)

The study quickly made headlines and sparked heated debate. Some exclaimed that it proved AI was "corrupting our brains," while others dismissed it as just another moral panic about new technologies. In an in-depth report published in mid-October, The Guardian took the discussion to a higher level with the pointed question, "Are we living in a golden age of stupidity?" In today's world of information overload, attention deficit, and ubiquitous AI, this question deserves our serious consideration.

Kosmyna's research revealed more than just reduced brain activity. In the experiment, students using ChatGPT had little recall of what they had just "written" after completing three essays. To be precise, 83% couldn't accurately quote from the essays they had written minutes earlier, compared to only 11% of students who wrote unaided or used search engines. Two English teachers, unaware of the source of the essays, used a harsh word when evaluating the AI-assisted essays: "soulless." The essays were remarkably homogeneous in structure and content, using identical expressions and viewpoints, as if they had been cast from the same mold.

Kosmyna calls this phenomenon "cognitive debt": a debt that accumulates unnoticed as reliance on AI makes it harder to develop and maintain independent thinking. She observed that by the third essay, ChatGPT users were increasingly adopting a "just give me the answer, fix this sentence, and edit it!" mode, replacing thought with pure copy-and-paste. This isn't an improvement in efficiency, but rather an outsourcing of cognitive processes.

This discovery forces us to confront a more fundamental question: What is actually happening in our brains as we embrace the conveniences brought by AI?

01. Nothing New Under the Sun

But before we fall prey to technological fatalism, let's review history. Every major information technology has been accompanied by similar fears.

Around 370 BC, Plato recorded Socrates' warning about the new technology of writing in his Phaedrus. When the Egyptian god Thoth presented the invention of writing to King Thamus, the king rejected it, arguing, "If men learn this, it will implant forgetfulness in their souls. They will no longer train their memories, for they will rely on external symbols rather than on internal recollection." Socrates argued that writing creates "the appearance of wisdom, but not true wisdom," because "whatever you ask of it, it will only repeat the same words," incapable of interaction or dialectic.

The irony of history is that we know of Socrates' opposition to writing only because Plato wrote it down.

In 1440, after Gutenberg invented movable metal type, the scribes' guild destroyed the printing presses and drove booksellers from town. In 1565, Swiss scholar Conrad Gesner warned that the "uncontrollable flood of information" would "confound and harm" the mind. By the 18th century, concerns were raised about "reading addiction" and "reading mania"—perceived as dangerous diseases affecting young people. The 19th-century pulp novels known as "penny dreadfuls" were blamed for leading young boys to murder and suicide.

In 1975, the introduction of calculators into schools sparked heated debate. At the 1986 National Council of Teachers of Mathematics convention, educators protested their use in elementary schools, fearing that students would "take shortcuts" and fail to develop mental arithmetic skills. The opponents' slogan was, "Buttons mean nothing until the brain is trained." However, research ultimately showed that students who used calculators "systematically had better arithmetic understanding and fluency" because calculators allowed them to explore more complex problems and improve their conceptual understanding.

Each time, the panic proved overblown. The printing press didn't destroy memory or thinking; it democratized knowledge, spurring the Enlightenment and the Scientific Revolution. Calculators didn't create a generation of people who couldn't do arithmetic; they freed students to tackle higher-level mathematical concepts. So, is AI just another example of history repeating itself?

02. Is This Time Really Different?

However, many researchers point out that AI differs fundamentally from past tools, in ways that may make the comfort of history less reliable.

Historical tools were assistive—they extended existing human capabilities, but humans still controlled the process. Writing expanded memory storage, and calculators expanded computational power, but their use presupposed knowing what you wanted to store or compute. AI, on the other hand, is autonomous—it makes independent decisions based on algorithms that humans may not understand, and it can learn and adapt. When you ask ChatGPT a question, it doesn't simply retrieve information; it generates new text. This process involves complex calculations of probability distributions and pattern recognition, and its underlying logic remains a black box to most users.

Historical tools were passive, requiring human guidance and interpretation. AI is active: it predicts, suggests, and even creates. It doesn't just store your thoughts; it generates new text, images, and code. This isn't about extending cognition, but potentially replacing it. Historical tools were also transparent, their functions visible and understandable. AI is opaque: the decision-making process of "black box" algorithms is often unexplainable. We may not know why AI gives a certain answer, and even AI developers often cannot fully explain the model's behavior.

Crucially, historical tools were domain-specific, each with a clear, limited purpose. AI is general-purpose: it can perform a wide range of cognitive tasks across domains, from writing to programming, from artistic creation to scientific research. This versatility means that AI's impact isn't limited to a single cognitive function but has the potential to permeate nearly every area of thought.

However, this comparison may be oversimplified. The "extended mind theory," proposed by philosophers Andy Clark and David Chalmers in 1998, offers a more insightful perspective. They argue that cognition is not confined to the brain or even the body but extends to the environment through tools and technology. Their famous thought experiment involves two fictional characters, Inga and Otto: Inga has an excellent memory and can recall the address of a museum directly from her mind. Otto, on the other hand, suffers from Alzheimer's disease and keeps all important information in a notebook he carries with him. When he wants to visit a museum, he naturally opens the notebook to look up the address.

Clark and Chalmers argue that, functionally, Otto's notebook plays the same role for him as Inga's biological memory does for her. It is a reliable, readily accessible source of information, an integral part of his cognitive processes. Therefore, Otto's mind has effectively "extended" into the notebook. The only difference is the medium of information (paper vs. neurons), not the cognitive function itself.

According to this logic, smartphones, GPS, calculators, and even AI are not just tools; they are part of our cognition. "We are already cyborgs" because our minds have extended beyond our skulls. This theory also echoes the idea proposed by Canadian communication scholar Marshall McLuhan in the 1960s: media are extensions of man. McLuhan argued that "the medium is the message," meaning that the form and characteristics of the medium itself, more than the content it conveys, shape our cognitive patterns and social structures.

From this perspective, the question is not whether AI will become part of our cognition; it already is. The real question is: Does this extension enhance or diminish our innate abilities? The answer depends on how we use it.

03. Attention and the Anxiety of "Stupidity"

From writing to AI, these tools have undoubtedly greatly improved our efficiency in processing information. But as knowledge acquisition becomes almost instantaneous, another key question arises: Is this efficiency eroding the foundation necessary for deep thinking—the ability to focus?

This contradiction was accurately foreseen over half a century ago.

In 1971, Nobel Prize-winning economist Herbert Simon articulated the idea behind what is now called the "attention economy": "In an information-rich world, the abundance of information means a scarcity of something else: a scarcity of the attention of information consumers." Today, this theory is more concrete than ever. A 2016 Microsoft study showed that the average human attention span plummeted from 12 seconds in 2000 to 8.25 seconds in 2015, even shorter than that of a goldfish (9 seconds). In a few years, this number will likely be even smaller.

Figure | Herbert Simon (Source: Wikipedia)

Tech consultant Linda Stone coined the term "continuous partial attention" in the late 1990s to describe a state typical of the digital age: trying to focus on multiple things simultaneously, yet never fully focusing on any one of them. Whether we're sneaking a look at our email during a Zoom meeting, scrolling through our phones while watching Netflix, or glancing at notifications while playing with our kids, we think we're effectively multitasking, but in reality we're in a state of perpetual cognitive overload. Stone's research found that 80% of people experience "screen apnea" while checking email: they become so absorbed in the endless stream of notifications that they forget to breathe properly. This constant state of alertness activates the fight-or-flight system, making us more forgetful, less decisive, and more distracted.

As marketing guru Seth Godin recently argued, attention is becoming a luxury. "Luxury goods are special in that they are scarce and expensive, and they confer status on certain people because they signal that we've paid more than necessary." Reading a nonfiction book in its entirety, listening to a public radio program, attending a concert—these very acts have become symbols of luxury. Godin writes, "By 'wasting' our attention on details, narratives, experiences, and everything else besides our to-do list, we send a message to ourselves and others—a message about allocating our time to things beyond optimizing performance or survival."

Godin's perspective forces us to reexamine the very question "Are we becoming dumber?" If the ability to focus is becoming a scarce "luxury," is the anxiety about losing it primarily concentrated among those who have the means to enjoy this "luxury"? In his analysis of cultural capital, French sociologist Pierre Bourdieu noted that so-called "good taste" and "cultural accomplishment" have never been neutral value judgments but rather tools of class distinction. To some extent, sustained attention and deep thinking now function the same way.

In a discussion about the Guardian report, one user stated that the title itself was "insulting," adding, "Without even reading the article, I can sense the triumphant arrogance of 'we're living in a stupid age.'" Another user responded that when he saw the title, he immediately thought of the hours he'd spent watching short videos, trying to understand cryptocurrency, pondering the impact of AI on education, and trying to figure out where things were going wrong in his life. "I think it's more of a criticism of the systems we've built—primarily big tech companies, but also the entire industrial complex—that have created a world where the answer is likely 'yes.'"

Yes, "stupidity" isn't an individual flaw, but a systemic product.

When our digital devices are designed to maximize engagement rather than comprehension, when algorithms optimize for clicks rather than understanding, and when "frictionless user experience" becomes the golden rule for tech products, the cognitive problems of ordinary users can't be attributed simply to laziness or a lack of self-control. They stem from the fact that the entire digital ecosystem is fundamentally not designed to foster deep cognition.

04. The Necessity of Friction

Cognitive psychologist David Geary once divided human cognitive abilities into "biologically primary abilities" (such as language acquisition, which evolve naturally) and "biologically secondary abilities" (such as reading and mathematics, which require deliberate learning). Higher-order thinking doesn't emerge spontaneously; it requires the "scaffolding" of memory and practice. Without this foundation, potential withers.

In an interview, Kosmyna repeatedly emphasized the point: "Our brains love shortcuts; it's in our nature. But your brain needs friction to learn. It needs to have a challenge." This concept of "friction" is key to understanding the impact of AI on cognition.

Ironically, the modern technology industry's promise is precisely to create a "frictionless user experience," ensuring that we encounter no friction as we swipe from app to app and screen to screen. This design philosophy has undoubtedly been commercially successful: it lets us unthinkingly outsource more and more information and work to digital devices, makes it easy to fall into the internet's traps, and has allowed generative AI to be integrated into our lives and work with remarkable speed. Those who opt out risk being left behind, as evidenced by employees fired for "not knowing how to use AI."

Figure | Employees laid off for "not embracing AI quickly enough" (Source: Fortune)

But learning requires friction. Memory requires effort, deep understanding requires struggle, and creativity requires exploration amid difficulty. When AI eliminates all this friction, when writing no longer requires organizing thoughts, programming no longer requires understanding logic, and problem-solving no longer requires real thinking, we gain convenience but lose opportunities for cognitive development.

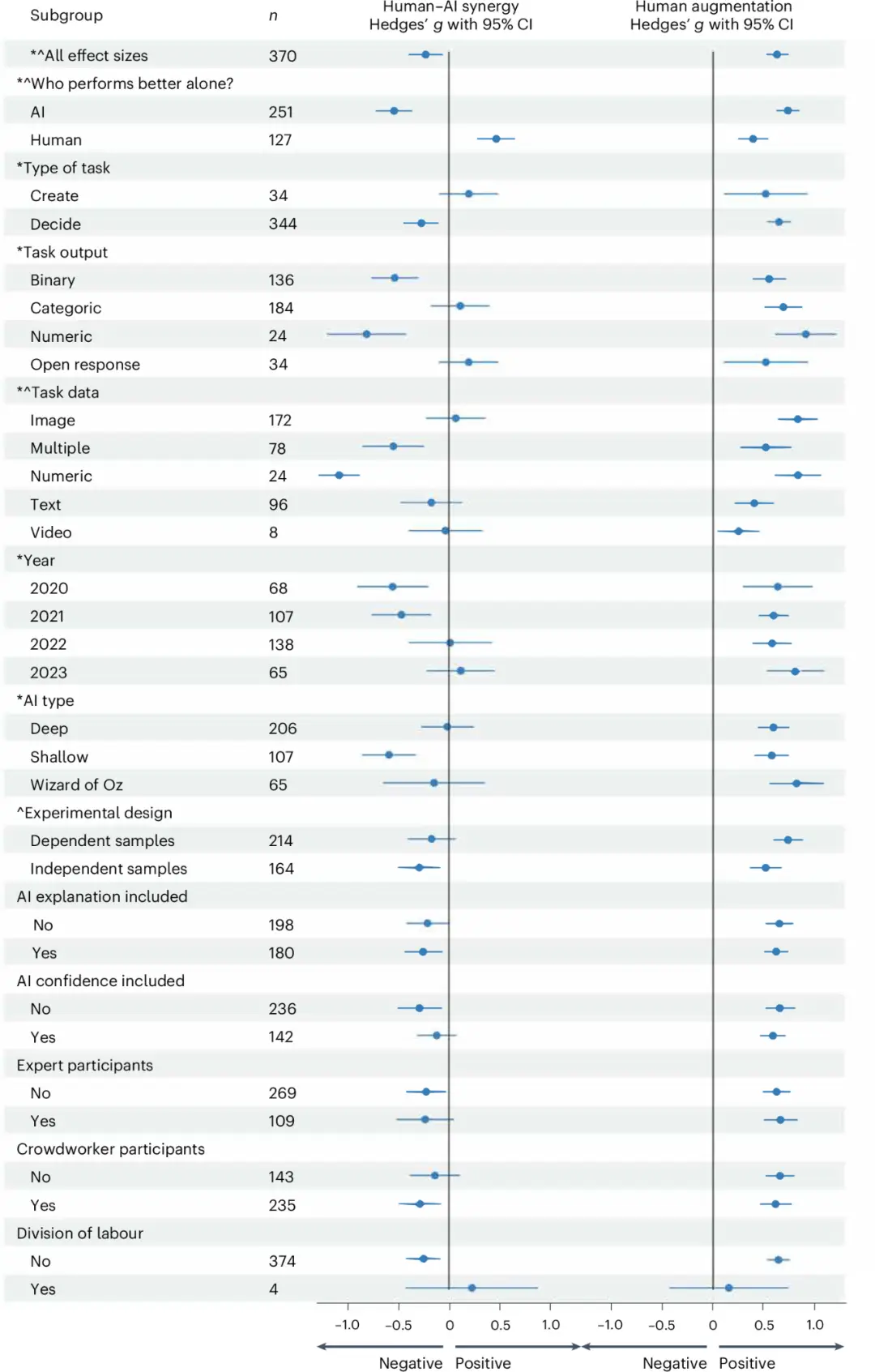

A large-scale meta-analysis published in the journal Nature Human Behaviour in 2024, combining data from 106 experiments and 370 effect sizes, revealed the true nature of human-machine collaboration. The study found that not all human-machine combinations are beneficial. For creative tasks, human-machine collaboration showed positive results, with a human augmentation effect size of 0.64. That figure is a standardized effect size rather than a percentage: it indicates that humans working with AI clearly outperformed humans working alone, not that they did 64% better. But the key difference is this: when humans actively engaged, critically evaluated AI output, and used it as a conversational partner rather than an answering machine, the effect was positive. Those who simply copied and pasted performed poorly.

Figure | Related Research (Source: Nature Human Behaviour)

This is precisely the most promising finding in Kosmyna's research. In the fourth phase of the experiment, the researchers had students who initially wrote independently transition to using ChatGPT. The surprising result: these students' brain activity actually increased. Kosmyna attributes this to curiosity and active engagement with the new tool. This suggests that timing is crucial: "These findings support an educational model that introduces AI integration only after learners have demonstrated sufficient independent cognitive effort."

In other words, the problem isn't AI itself, but at what stage of cognitive development and in what ways we use it. Someone who has already mastered basic writing skills and can organize thoughts and construct arguments independently may benefit from using AI to expand their horizons and explore new forms of expression. However, someone who hasn't yet built these foundational abilities may never develop them if they rely on AI too early. The fault lies not with the technology but with how we use it.

05. Our Choices

Back to the original question: Are we living in a golden age of stupidity? The answer is: perhaps, but it's not inevitable.

The evidence is compelling that we are at a cognitive crossroads. Decreased brain connectivity, shortened attention spans—these are real trends that deserve serious attention. But they aren't simply a "technology is making us stupider" story. History shows us that tech panics are often exaggerated and that society is remarkably adaptable. But history also shows us that McLuhan was right: media do reshape our cognitive processes, even if they don't destroy them. We didn't become stupider because of printing, but we did stop developing certain memory skills. We didn't become stupider because of calculators, but we did change the focus of math education.

What makes AI different is its autonomy, opacity, and versatility. It doesn't just extend our capabilities; it has the potential to replace our cognitive processes themselves. But this depends on how we design and use it. Education writer Daisy Christodoulou's "stupid society" isn't an inevitable consequence of AI, but the result of poor design and poor choices. If we design AI tools to encourage passive consumption rather than active participation, if we rely on AI before building a foundation, if we optimize for engagement rather than comprehension, then yes, we will become dumber.

But another path is possible. Kosmyna's research actually offers a clue: students who first built a solid writing foundation and then used ChatGPT showed increased brain activity, not decreased. This suggests that the key isn't whether to use AI, but when and how to use it. Once a person has learned how to organize their thoughts, construct arguments, and critically evaluate information, AI can become a powerful augmentation tool, helping them explore more possibilities and handle more complex tasks.

Socrates worried that writing would make us forgetful, but Plato wrote about those concerns, and we're still reading, pondering, and debating them today. Technology has changed us, but it hasn't destroyed us. The key is whether we can continue to be like Plato: using tools without being used by them; expanding our minds without abandoning our core; embracing the future without forgetting the fundamentals.

More than half a century after Herbert Simon pointed out the scarcity of attention, what's even more scarce today is deep cognitive ability—the ability to discern truth from fiction amidst a deluge of information, to maintain focus amidst superficial stimulation, and to sustain independent thought amidst AI prompts. This isn't about resisting technology, but about consciously shaping our relationship with it.

Perhaps this isn't an age of stupidity, but an age of choice—although this very choice is a luxury. Being able to proactively decide how to use AI, to consciously create friction in a frictionless digital world, and to "waste" time on deep thought all require resources, privilege, and cultural capital. But acknowledging this doesn't mean we should abandon these choices. On the contrary, this conscious choice—to be an active user rather than a passive consumer, to use AI to augment rather than replace our cognition, to let our thinking grow amidst friction—may be one of the few ways we have to combat technological alienation.

This is the real question. And the answer will be written by each of us, with every click, every prompt, every choice between deep thought and shallow swiping. Faced with media overinterpretation of her study, Kosmyna herself avoids words like "stupid," "dull," or "brain rot" to describe the impact of AI, because such words undermine the work her team is doing. What's truly needed, she says, is "very careful consideration and ongoing research."

In this sense, the greatest value of Kosmyna's research isn't in providing a definitive answer, but in raising a pressing question: as we outsource more and more thinking to machines, how do we ensure we still know how to think? The most frightening thing isn't that we become dumber, but that we lose the ability to recognize when we are.